https://semver.org/ A proposal to create some formalization around what the X.Y.Z terms mean in a version number and thus, make it clear as to which APIs are backwards compatible and which are not (APIs take in version number as an argument)

Tuesday, November 20, 2018

Friday, November 2, 2018

Wednesday, October 31, 2018

Facebook Infra Overview

http://www.ece.lsu.edu/hpca-18/files/HPCA2012_Facebook_Keynote.pdf Liked this. Making a note.

Friday, October 5, 2018

Arguments against OOP

https://caseymuratori.com/blog_0015 "The fallacy of “object-oriented programming” is exactly that: that code is at all “object-oriented”. It isn’t. Code is procedurally oriented, and the “objects” are simply constructs that arise that allow procedures to be reused. So if you just let that happen instead of trying to force everything to work backwards, programming becomes immensely more pleasant."

Thursday, September 27, 2018

HAProxy internals

http://www.haproxy.org/

Things to follow up on:

(1) How is zero copy done? Using the "splice" system call on Linux

(2) MRU memory allocator

(3) Accepting multiple accepts at the same time across different processing listening to different ports of course.

(4) Tree based storage e.g.making heavy use of the Elastic Binary Tree http://wtarreau.blogspot.com/2011/12/elastic-binary-trees-ebtree.html

Things to follow up on:

(1) How is zero copy done? Using the "splice" system call on Linux

(2) MRU memory allocator

(3) Accepting multiple accepts at the same time across different processing listening to different ports of course.

(4) Tree based storage e.g.making heavy use of the Elastic Binary Tree http://wtarreau.blogspot.com/2011/12/elastic-binary-trees-ebtree.html

Thursday, June 7, 2018

Tuesday, June 5, 2018

Design patterns in C++

https://github.com/JakubVojvoda/design-patterns-cpp liked this. noted.

Factory pattern:

https://www.oodesign.com/factory-pattern.html

Factory pattern:

https://www.oodesign.com/factory-pattern.html

Wednesday, April 4, 2018

Blog on machine learning

Came across what some people say as one of the best places to learn about machine learning. Making a note: https://colah.github.io/

Sunday, March 4, 2018

Mobile Real-time video segmentation

Came across this blog from google about how they have managed to find out the background in a video. https://research.googleblog.com/2018/03/mobile-real-time-video-segmentation.html is the the link to the google research paper about this and https://people.eecs.berkeley.edu/~jonlong/long_shelhamer_fcn.pdf is the paper they have built upon. I have to look into this soon. Adding as a follow up.

Monday, February 19, 2018

Monday, January 22, 2018

AI Magazine

https://www.aaai.org/ojs/index.php/aimagazine/index

What got me interested in this is : https://www.quora.com/What-is-the-biggest-unresolved-problem-for-AI, which is the quest for a general purpose intelligence i.e. building systems that can help us come up with the next "theory of relativity", or think like a human etc. Today's AI is mostly focused on classification

What got me interested in this is : https://www.quora.com/What-is-the-biggest-unresolved-problem-for-AI, which is the quest for a general purpose intelligence i.e. building systems that can help us come up with the next "theory of relativity", or think like a human etc. Today's AI is mostly focused on classification

Thursday, January 18, 2018

The set-theoretic multiverse

I came across this somewhat intriguing idea of a set theoretic multiverse. I want to try to find out the links between a set theoretical multiverse and logics. As i look at it, I see logic as a theory based on the rules of set theory to propagate labels such as truth/false. What I am curious about is whether if there are more than 1 foundational set theory - does that lead to many logics .

http://lumiere.ens.fr/~dbonnay/files/talks/hamkins.pdf

http://lumiere.ens.fr/~dbonnay/files/talks/hamkins.pdf

Monday, May 22, 2017

Doxatic logic & types of reasoners

https://en.wikipedia.org/wiki/Doxastic_logic

Something i read long time back when i read Smullyan's books, but making a note because i love it. His types of reasoners is beautiful :)

Something i read long time back when i read Smullyan's books, but making a note because i love it. His types of reasoners is beautiful :)

Types of reasoners[edit]

To demonstrate the properties of sets of beliefs, Raymond Smullyan defines the following types of reasoners:

- Accurate reasoner:[1][2][3][4] An accurate reasoner never believes any false proposition. (modal axiom T)

-

- A conceited reasoner with rationality of at least type 1 (see below) will necessarily lapse into inaccuracy.

- Consistent reasoner:[1][2][3][4] A consistent reasoner never simultaneously believes a proposition and its negation. (modal axiom D)

- Normal reasoner:[1][2][3][4] A normal reasoner is one who, while believing

also believes he or she believes p (modal axiom 4).

- Peculiar reasoner:[1][4] A peculiar reasoner believes proposition p while also believing he or she does not believe

Although a peculiar reasoner may seem like a strange psychological phenomenon (see Moore's paradox), a peculiar reasoner is necessarily inaccurate but not necessarily inconsistent.

- Reflexive reasoner:[1][4] A reflexive reasoner is one for whom every proposition

has some proposition

such that the reasoner believes

.

- If a reflexive reasoner of type 4 [see below] believes

, he or she will believe p. This is a parallelism of Löb's theorem for reasoners.

- Unstable reasoner:[1][4] An unstable reasoner is one who believes that he or she believes some proposition, but in fact does not believe it. This is just as strange a psychological phenomenon as peculiarity; however, an unstable reasoner is not necessarily inconsistent.

- Stable reasoner:[1][4] A stable reasoner is not unstable. That is, for every

if he or she believes

then he or she believes

Note that stability is the converse of normality. We will say that a reasoner believes he or she is stable if for every proposition

he or she believes

(believing: "If I should ever believe that I believe

then I really will believe

").

- Modest reasoner:[1][4] A modest reasoner is one for whom every believed proposition

,

only if he or she believes

. A modest reasoner never believes

unless he or she believes

. Any reflexive reasoner of type 4 is modest. (Löb's Theorem)

Curry Howard Correspondence

Came across this which is a link between computer programs and logic, in the sense that using computer programs to "prove" things is legit. My interpretation of this is that it shows that constructs we use in computers languages can solve the same problems we solve using logic --

https://www.quora.com/Why-is-the-Curry-Howard-isomorphism-interesting

Not really a big deal, but something to make a note of, for future reference.

https://www.quora.com/Why-is-the-Curry-Howard-isomorphism-interesting

Not really a big deal, but something to make a note of, for future reference.

Saturday, May 20, 2017

Wednesday, April 19, 2017

Thursday, February 23, 2017

2 types of CS people

Old one from Douglas Comer, but still agree:

https://www.cs.purdue.edu/homes/dec/essay.criticize.html

https://www.cs.purdue.edu/homes/dec/essay.criticize.html

Wednesday, January 4, 2017

Software Products someone needs to develop

- Given a program, create a flowchart out of it.

- https://code2flow.com/ is one software which already does this. There may many approaches to building the UI, scaling the backend etc.

- Software idea generator. Create a list of software ideas e.g. Object Oriented, Operating system, Distributed, Data Store, Functional, Classification, Image Recognition, Ad Placement, Billing, etc. The point is that one first needs to come up with a design for this.

- Automatically convert from one programming language to another -- cross compiler. Difficult problem to solve in general

Sunday, October 16, 2016

v8

To begin , came across this, which I liked: https://archrometects.files.wordpress.com/2009/10/assignment-01-conceptual-architecture-of-google-chrome-archrometects.pdf

Friday, October 7, 2016

Python Global interpreter lock !

http://www.dabeaz.com/python/UnderstandingGIL.pdf for reference. Amazed. I was falsely under the impression that my python pthreads were speeding things up :S

Monday, September 19, 2016

Coding challenges

http://eudyptula-challenge.org/ Some hello-world like challenges for linux kernel

http://cryptopals.com/ similar challenges for cryptography

http://cryptopals.com/ similar challenges for cryptography

Sunday, September 11, 2016

Wednesday, August 17, 2016

Wednesday, May 11, 2016

Thursday, May 5, 2016

On Software Architecture

Going to make a list of common software architecture patterns, and how does one go about choosing one over another. Here are a few links about this: I think some of these things are overlapping e.g. one can create a layered model using an event driven model for parts of the layer.

http://techbeacon.com/top-5-software-architecture-patterns-how-make-right-choice, where they are listing the models as

https://manohars.files.wordpress.com/2009/11/97-things-every-software-architect-should-know.pdf

http://techbeacon.com/top-5-software-architecture-patterns-how-make-right-choice, where they are listing the models as

- Layered/n-tier model

- Event driven model

- Microkernel/Plug-in Architecture

- Microservices Architecture

- Space based architecture/Cloud Architecture

Also came across this interesting book about software architecture paradigms. I believe this would be very useful when it comes to understanding and designing software systems

https://manohars.files.wordpress.com/2009/11/97-things-every-software-architect-should-know.pdf

Wednesday, April 6, 2016

How big is the Linux Kernel

http://superuser.com/questions/370586/how-can-a-linux-kernel-be-so-small

Early Linux distributions such as Tom's Root-Boot, MuLinux, Diet-router, (the now defunct) LOAFand many others now departed, all fitted a working Linux system on to one or two 1.44 MB diskettes.

The Linux kernel has grown but don't forget it is modular, kernel modules are loaded as needed. Thus it is still possible to produce a Linux distribution with a very small footprint.

See: Kernel Size Tuning Guide - eLinux.org

If you read Linux_tiny.pdf you will see this

historic 0.99pl15 kernel: Slackware 1.1.2, 1994 301K

Fedora Core 2 1.2M

SuSE 9.1 1.5M

2.6.5-tiny1 test config: IDE, ext2, TCP, NIC 363K

Early Linux distributions such as Tom's Root-Boot, MuLinux, Diet-router, (the now defunct) LOAFand many others now departed, all fitted a working Linux system on to one or two 1.44 MB diskettes.

The Linux kernel has grown but don't forget it is modular, kernel modules are loaded as needed. Thus it is still possible to produce a Linux distribution with a very small footprint.

See: Kernel Size Tuning Guide - eLinux.org

If you read Linux_tiny.pdf you will see this

historic 0.99pl15 kernel: Slackware 1.1.2, 1994 301K

Fedora Core 2 1.2M

SuSE 9.1 1.5M

2.6.5-tiny1 test config: IDE, ext2, TCP, NIC 363K

Thursday, March 31, 2016

Variation to a theme: Subtraction

Found this pretty cool. Making a note:

http://mathmisery.com/wp/2016/02/21/subtraction-a-variation-on-a-theme/

http://mathmisery.com/wp/2016/02/21/subtraction-a-variation-on-a-theme/

Thoughts on async programming and Coroutines vs Generators vs Continuations

Here is my understanding of these related ideas.

Here is a model for async programming , where the + is the event loop. I think that one could use such kind of diagrams to model a async call model e.g. as one finds in Javascript, or as one constructs using libevent.

On a slightly different topic about continuations, co-routines, generators and functions, i look upon them as the following. This is still work in progress and I will update as my understanding improves. Something to begin with as a note:

Following is a quick note about how generators exist in python:

Generators in Python:

I have worked with generators in the context of RtmpLite

Generators are used to write non-blocking I/O in synchronous style without all the nesting etc that comes with asynchronous programming. (ref: http://calculist.org/blog/2011/12/14/why-coroutines-wont-work-on-the-web/) . As shown below, the sleep(1000) will invoke a timer on the event loop and the control will yield back to the caller. Eventually, when the timer event triggers it will be added to the event queue and be executed starting off from where it left.

Here is a model for async programming , where the + is the event loop. I think that one could use such kind of diagrams to model a async call model e.g. as one finds in Javascript, or as one constructs using libevent.

On a slightly different topic about continuations, co-routines, generators and functions, i look upon them as the following. This is still work in progress and I will update as my understanding improves. Something to begin with as a note:

Following is a quick note about how generators exist in python:

Generators in Python:

I have worked with generators in the context of RtmpLite

Generators are used to write non-blocking I/O in synchronous style without all the nesting etc that comes with asynchronous programming. (ref: http://calculist.org/blog/2011/12/14/why-coroutines-wont-work-on-the-web/) . As shown below, the sleep(1000) will invoke a timer on the event loop and the control will yield back to the caller. Eventually, when the timer event triggers it will be added to the event queue and be executed starting off from where it left.

1 2 3 4 5 | Task(function() { console.log('wait... ' + new Date); yield sleep(1000); console.log('ok... ' + new Date); }).run(); |

Monday, February 15, 2016

Software Systems engineering; old vs new

Liked this discussion. Making a note : https://news.ycombinator.com/item?id=11099925

Tuesday, January 26, 2016

Reverse Turing Test

Anyone aware of a reverse Turing test ? I.e. how to prove that one is a machine -- please let me know.

Btw, on the topic here is a funny paper i came across: https://www.msu.edu/~pennock5/courses/ALife/Striegel_Failed_Turing_test.pdf

(also follow up on this game: http://www.engadget.com/2015/04/28/spyparty-new-characters-indie-aa/)

Btw, on the topic here is a funny paper i came across: https://www.msu.edu/~pennock5/courses/ALife/Striegel_Failed_Turing_test.pdf

(also follow up on this game: http://www.engadget.com/2015/04/28/spyparty-new-characters-indie-aa/)

Friday, October 30, 2015

Tuesday, August 25, 2015

Tuesday, June 16, 2015

Friday, May 8, 2015

Lock modes

Picking it from Wikipedia (Distributed Lock Manager). Making a note basically:

Lock modes[edit]

A process running within a VMSCluster may obtain a lock on a resource. There are six lock modes that can be granted, and these determine the level of exclusivity of been granted, it is possible to convert the lock to a higher or lower level of lock mode. When all processes have unlocked a resource, the system's information about the resource is destroyed.

- Null (NL). Indicates interest in the resource, but does not prevent other processes from locking it. It has the advantage that the resource and its lock value block are preserved, even when no processes are locking it.

- Concurrent Read (CR). Indicates a desire to read (but not update) the resource. It allows other processes to read or update the resource, but prevents others from having exclusive access to it. This is usually employed on high-level resources, in order that more restrictive locks can be obtained on subordinate resources.

- Concurrent Write (CW). Indicates a desire to read and update the resource. It also allows other processes to read or update the resource, but prevents others from having exclusive access to it. This is also usually employed on high-level resources, in order that more restrictive locks can be obtained on subordinate resources.

- Protected Read (PR). This is the traditional share lock, which indicates a desire to read the resource but prevents other from updating it. Others can however also read the resource.

- Protected Write (PW). This is the traditional update lock, which indicates a desire to read and update the resource and prevents others from updating it. Others with Concurrent Read access can however read the resource.

- Exclusive (EX). This is the traditional exclusive lock which allows read and update access to the resource, and prevents others from having any access to it.

The following truth table shows the compatibility of each lock mode with the others:

| Mode | NL | CR | CW | PR | PW | EX |

|---|---|---|---|---|---|---|

| NL | Yes | Yes | Yes | Yes | Yes | Yes |

| CR | Yes | Yes | Yes | Yes | Yes | No |

| CW | Yes | Yes | Yes | No | No | No |

| PR | Yes | Yes | No | Yes | No | No |

| PW | Yes | Yes | No | No | No | No |

| EX | Yes | No | No | No | No | No |

Thursday, May 7, 2015

Root Certificate, Proxy and HTTPS (and HTTPS for CDN)

Quick note as to how proxies (such as CDN caching machines) can use HTTPS to enact a Man in the Middle.

http://security.stackexchange.com/questions/8145/does-https-prevent-man-in-the-middle-attacks-by-proxy-server

End client lets say trying to talk HTTPS to google.com through a proxy (e.g. a CDN server). In this case, if the proxy sends its own certificate as a "root" certificate either signed by itself, or signed by a trusted third party, and if that certificate is accepted by the client, then when the client tries to contact google.com, client will first look at google.com's certificate, but which in this case would be given by the proxy to the client and which uses proxy's public key for encryption. When the client encrypts using the proxy's public key, the proxy can decrypt and then send it over to google using google's public key (which came through the certificate that was sent by google to the proxy). This way, the proxy can intercept all HTTPS communication.

http://security.stackexchange.com/questions/8145/does-https-prevent-man-in-the-middle-attacks-by-proxy-server

End client lets say trying to talk HTTPS to google.com through a proxy (e.g. a CDN server). In this case, if the proxy sends its own certificate as a "root" certificate either signed by itself, or signed by a trusted third party, and if that certificate is accepted by the client, then when the client tries to contact google.com, client will first look at google.com's certificate, but which in this case would be given by the proxy to the client and which uses proxy's public key for encryption. When the client encrypts using the proxy's public key, the proxy can decrypt and then send it over to google using google's public key (which came through the certificate that was sent by google to the proxy). This way, the proxy can intercept all HTTPS communication.

Monday, April 13, 2015

effective coding habits

http://www.infoq.com/presentations/7-ineffective-coding-habits kind of silly but still pretty useful

Wednesday, April 8, 2015

Thursday, February 19, 2015

Quick Recap of J2EE

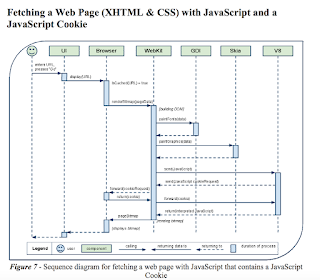

As can be seen here, a request for getting a jsp first reaches a web-server (e.g. apache). Apache checks up its virtualhost for the specified URL, identifies the document root, picks up the JSP, and then Apache then hands over the request via module mod_jk to Tomcat, whose job it is to do the following

One can even look upon the processing as follows (taken from Wikipedia)

If one is looking at things from the J2EE model, one typically works with a MVC relationship between classes - the relationship works as follows (ill put up a picture on this soon).

Monday, February 16, 2015

Software Engineering

Making a list of some software engineering concepts and principles ive come across.

- Decorator (wrapper where API of object being used is same as exposed)

- Adaptor (wrapper but where API of object being used is different from exposed API)

- Singleton (static object, private constructor)

- Factory (when e.g. one needs to create an Matrix of different types based on the type specified in the constructor e.g.). See this

- Inversion of control e.g. the same example i described above for factory can be better implemented when each Matrix type is implementing an interface (or extending virtual classes) and when the factory class's constructor is created, the concrete object is passed, and then appropriate functions can be called). See this

- Definition of "static" keyword in C++: this keyword has 3 uses. limit scope within translation unit, retain value across function calls, do not be part of object instances

Wednesday, December 3, 2014

Thread Specific heap/tcmalloc

Wanted to write this down somewhere for some time, so doing it now.

As i have touched upon before (and as i understand), user-level threads share the data section of its parent process, as well as the heap of the parent process (note that when a process is "Executed" by the kernel, the kernel allocates an area to execute consisting of the text segment, data segment (memory arena). Because all threads share the heap, the allocation (e.g. done using malloc (in turn using brk or mmap) ) in the heap needs to have a lock, and this is a costly operation. Every time malloc/free is called from any of the threads, a lock has to be taken, then memory allocated/deallocated, while other threads wait on the lock.

With tcmalloc, i would expect that the heap is broken up into parts so that each thread can use only its part of the heap and there is also a centralized heap where the thread will try to grab data from in case of running out of memory on its local heap.

(btw, just while on the topic, making a quick note about Valgrind's issues with tcmalloc: from valgrind man page: "When a shared library is loaded, Valgrind checks for functions in the library that must be replaced or wrapped. For example, Memcheck replaces all malloc related functions (malloc, free, calloc, ...) with its own versions. Such replacements are done by default only in shared libraries whose soname matches a predefined soname pattern (e.g.libc.so* on linux). By default, no replacement is done for a statically linked library or for alternative libraries such as tcmalloc. In some cases, the replacements allow --soname-synonyms to specify one additional synonym pattern, giving flexibility in the replacement".)

As i have touched upon before (and as i understand), user-level threads share the data section of its parent process, as well as the heap of the parent process (note that when a process is "Executed" by the kernel, the kernel allocates an area to execute consisting of the text segment, data segment (memory arena). Because all threads share the heap, the allocation (e.g. done using malloc (in turn using brk or mmap) ) in the heap needs to have a lock, and this is a costly operation. Every time malloc/free is called from any of the threads, a lock has to be taken, then memory allocated/deallocated, while other threads wait on the lock.

With tcmalloc, i would expect that the heap is broken up into parts so that each thread can use only its part of the heap and there is also a centralized heap where the thread will try to grab data from in case of running out of memory on its local heap.

(btw, just while on the topic, making a quick note about Valgrind's issues with tcmalloc: from valgrind man page: "When a shared library is loaded, Valgrind checks for functions in the library that must be replaced or wrapped. For example, Memcheck replaces all malloc related functions (malloc, free, calloc, ...) with its own versions. Such replacements are done by default only in shared libraries whose soname matches a predefined soname pattern (e.g.libc.so* on linux). By default, no replacement is done for a statically linked library or for alternative libraries such as tcmalloc. In some cases, the replacements allow --soname-synonyms to specify one additional synonym pattern, giving flexibility in the replacement".)

Tuesday, November 11, 2014

Saturday, October 18, 2014

Rate Control/Traffic Policing/Traffic Shaping

Again, nothing new and something quite well known in the networking literature (maybe networking 101 for some) but putting it out here just as a reference because i think this is used pretty commonly in the networking industry and folks should be aware about this.

Let's say the goal is to rate control events (an event can be anything like number of hits, or number of clicks or say number of bytes delivered etc.) so that incoming traffic can be handled without overwhelming one's servers. The most commonly used (in my experience) algorithm for rate control (shaping, policing) is the token-bucket or leaky bucket based on one's requirements. However, one may think about trying to use other algorithms e.g. a sliding window. Here are the pros and cons of each approach:

Let's say the goal is to rate control events (an event can be anything like number of hits, or number of clicks or say number of bytes delivered etc.) so that incoming traffic can be handled without overwhelming one's servers. The most commonly used (in my experience) algorithm for rate control (shaping, policing) is the token-bucket or leaky bucket based on one's requirements. However, one may think about trying to use other algorithms e.g. a sliding window. Here are the pros and cons of each approach:

- Sliding Window: In this approach, we specify the rate in the sliding window that we will accept .e.g B events in the window, and anything in excess will be dropped. This approach has the problem as shown below that it cannot handle burst properly. Especially in a pathological case, this algorithm has the risk of shaping the traffic incorrectly over a long duration. As one can see in the picture below, one reason burst could not be handled well was because our sliding window slid 1 slot at a time. If instead we slide a random amount, we have effectively constructed a "randomized algorithm", that would not be 100% accurate rate control but which will be on average expected to be pretty close to the expected rate. Further, one can combine the sliding window approach with the token bucket approach as outlined below.

- Leaky Bucket algorithm: In this algorithm, a "bucket" data structure is created, which can be implemented as a queue and packets are inserted into the queue when they arrive, and are taken out at a predefined fixed rate. If an egress event occurs and there is nothing in the queue, we say that an "underrun" has occurred. If there are too many packets in the queue, specified by a size of the queue, we say that an "overrun" has occurred. The question of how big a burst size can be is configurable and is called as the sustainable cell rate (or sustainable bitrate, or sustainable event rate). [Ref: Wikipedia, Image credits, Wikpedia]. This is useful as a jitter buffer i.e. a buffer to eliminate jitter i.e. packets/events come in at whatever rate , but they will go out at a fixed rate.

- Token Bucket algorithm: In this approach, tokens are generated at a constant rate (or whatever rate one wants), and then arriving events/data is queued up and then when a packet is supposed to be dequeued (See *). When the packet is dequeued, number of events associated with that packet are pulled out of the token bucket. [Image credits: this]. * Typically there is some logic for dequeuing, e.g. (A) when trying to dequeue media packets that have a timestamp .e.g. a PTS in a MPEG2 stream, the packets will sit in the queue till the system wall-clock time/timestamp reaches the PTS of the packet (B) Sometimes networking queues are implemented as simply list-driven e.g. reorder queue: one can keep a counter for the expected packet count, and keep egressing the packets that arrive in order. When a packet arrives out of order (greater than the one expected), the packets are queued up in a sorted queue. When the expected packet arrives, elements in the queue that are in order are dequeued (and passed to token check stage). Note that the token bucket algorithm can also run into problems with bursty traffic. One may need to have a token debt- algorithm to deal with certain scenarios (http://www.google.com/patents/US20110075562 where if the token count becomes negative, the egress is stalled till the count becomes positive again. This is good for shaping. What about Policing using token bucket ? That can be handled by having a lower bound for the debt. Our rate control/policer can only work in those bounds of debt. For policing, there could be a long-term debt checker, which if non-zero guarantees that our algorithm is token-non-negative, which means that the given stream is within bounds. If not, we drop.

- Hybrids: one can devise hybrids e.g. leaky bucket + token bucket, where the data incoming out of a leaky bucket comes at a fixed rate, and goes into the token bucket queue at that fixed rate and rate control and policing can be done at the outgoing packet level. For Policing, The Sliding window approach can also be combined with token bucket, where some number of tokens are generated every second, say B tokens/sec, so that way the burst shown in that example will go through, but had there been another burst (3rd), then that would be dropped. Thus, we would need to have negative debt tokens as described in the point 3 above.

Of course, when implementing these, one needs some kind of timer mechanism, and one can use the commonly used libevent to create timers using evtimer_new(), and evtimer_add() etc. Note that libevent's timing mechanism is heap based where insertions and deletions are O(lgn). (see minheap-internal.h)

One can of course, also implement timers using timing wheels: e.g. this http://25thandclement.com/~william/projects/timeout.c.html, where the insertions and deletions are O(1).

Subscribe to:

Posts (Atom)

![{\displaystyle {\mathcal {B}}[\neg \exists p(\neg p\wedge {\mathcal {B}}p)]\quad {\text{or}}\quad {\mathcal {B}}[\forall p({\mathcal {B}}p\to p)]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f49ff5b420b72caee5e98ea60b09ecd5ce561e41)

.png)